For any readers who may not know, the conductor (that person in a suit who stands in front of an orchestra gesticulating wildly at them) has the job of directing the players' performance. With the right hand, a conductor sets the speed at which they want the music to be played - controlling the ebb and flow of the tempo for maximum musical effect. With the left hand, they usually signal to specific players (or sections) to adjust the volume at which they are playing, or perhaps to suggest a way in which to deliver the notes (most conductors have, in my experience, made a great many strange and vaguely threatening gestures toward their ensembles by the end of their first year).

Anyone who has read my previous posts will have seen some videos of my gesture recognition system controlling some simple actions. In this project, I have attempted to take it further and make some initial forays into the world of VR music, by building an interface for adjusting the placement and volume of the various instruments in one of my early compositions (I'm calling it an early composition to try and excuse how awful and repetitive it is).

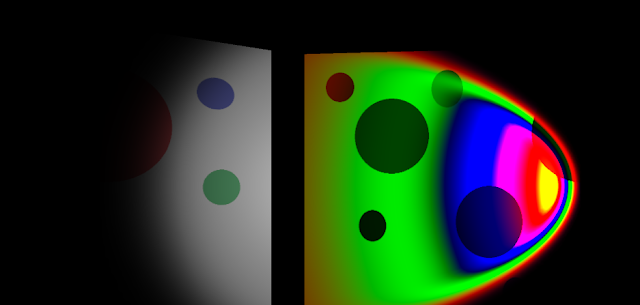

As you will see, the project consists of 10 orbs - one for each instrument, each of which displays the waveform of its audio output. By physically arranging these audio-orbs, the user is able to adjust the way the performance sounds - and to familiarize themselves with each instrument's part.

This is the most basic set-up for this system, and I will be adding more and more features to it. For example, rather than having the distance of each orb control that instrument's volume, it is possible to instead re-scale the orbs with gestures (as per my previous videos), and have the size affect the volume. This would free up distance to control reverb saturation (so that, as one might expect in real life, the farther away the orb is, the more its output reverberates and is delayed).
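To make those two mappings a little more concrete, here is a minimal Python sketch. It is not the code from the app: the `Orb` class, the function names, the inverse-distance falloff and all of the ranges (`ref`, `max_d`, `min_scale`, `max_scale`) are assumptions chosen purely for illustration.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Orb:
    """Hypothetical stand-in for one instrument's orb."""
    position: Vec3
    scale: float = 1.0


def clamp(x: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))


def distance_to_listener(orb: Orb, listener: Vec3 = (0.0, 0.0, 0.0)) -> float:
    return math.dist(orb.position, listener)


def volume_from_distance(d: float, ref: float = 1.0, max_d: float = 10.0) -> float:
    """Basic set-up: full volume within `ref`, then ref/d falloff, silent at max_d."""
    if d >= max_d:
        return 0.0
    return clamp(ref / max(d, ref))


def volume_from_scale(scale: float, min_scale: float = 0.5, max_scale: float = 2.0) -> float:
    """Alternative: a bigger orb is louder (linear between the two assumed limits)."""
    return clamp((scale - min_scale) / (max_scale - min_scale))


def reverb_mix_from_distance(d: float, max_d: float = 10.0) -> float:
    """Alternative: the farther the orb, the wetter the signal (0 = dry, 1 = fully wet)."""
    return clamp(d / max_d)


# An orb 5 units away, scaled up slightly by a gesture.
orb = Orb(position=(3.0, 4.0, 0.0), scale=1.25)
d = distance_to_listener(orb)
print(volume_from_distance(d), volume_from_scale(orb.scale), reverb_mix_from_distance(d))
```

In the alternative scheme, only `volume_from_scale` and `reverb_mix_from_distance` would be applied to each orb, so moving an orb changes how "roomy" it sounds while resizing it changes how loud it is.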

Swapping the mappings around like this is another fairly simple use-case for this project. There are a lot of ways it could be expanded and developed further - hopefully, more on that in a future post.

Anyway, without further ado, here is the video of one of my pieces being performed in the app.

(I have tried to make it as interesting as possible, but feel free to skip through.)

As always, thanks for reading! Stay tuned for more posts and projects!